To Avoid Accusations of AI Cheating, College Students Are Turning to AI

College campuses across the United States are witnessing an unexpected twist in the ongoing evolution of artificial intelligence: students leveraging AI tools to prove their own humanity. As educational institutions grapple with the rising tide of AI-generated content, a novel industry of AI “humanizers” has emerged. These tools aim to modify writing in ways that make it appear authored purely by human effort — even if it wasn’t.

The rapid adoption of generative AI technologies, fueled by the likes of ChatGPT and similar tools, has led to unprecedented challenges in academia. While these advancements were expected to aid learning, they’ve instead ignited concerns about cheating and plagiarism, creating what some are calling an “AI arms race.” But, as it turns out, students accused of misusing AI are sometimes turning to AI itself to defend their originality. This paradox is reshaping how academic integrity is policed and protected — with implications far beyond the classroom.

The Emergence of AI “Humanizers”

Humanizer tools are the newest players in this academic standoff. These programs analyze text and alter it to appear less robotic, sidestepping the scrutiny of AI detection software. Some are free, while others charge users upwards of $20 per month for their services. Their rise is closely tied to the frustrations voiced by many students who have faced baseless accusations of academic dishonesty.

“Students now are trying to prove that they’re human, even if they’ve never touched AI,” said Erin Ramirez, an associate professor at California State University, Monterey Bay, in an interview with NBC News. She described the current climate as a “spiral that will never end,” where efforts to enforce integrity only push both students and educators further into murky ethical territory.

One example is Aldan Creo, a graduate student at the University of California, San Diego, who studies AI and its impact on higher education. “If we write properly, we get accused of being AI; it’s absolutely ridiculous,” he said. “Long term, I think it’s going to be a big problem.” For these students, the use of humanizers isn’t a shortcut to cheat but a means to avoid miscarriages of justice.

The Shortcomings of AI Detectors

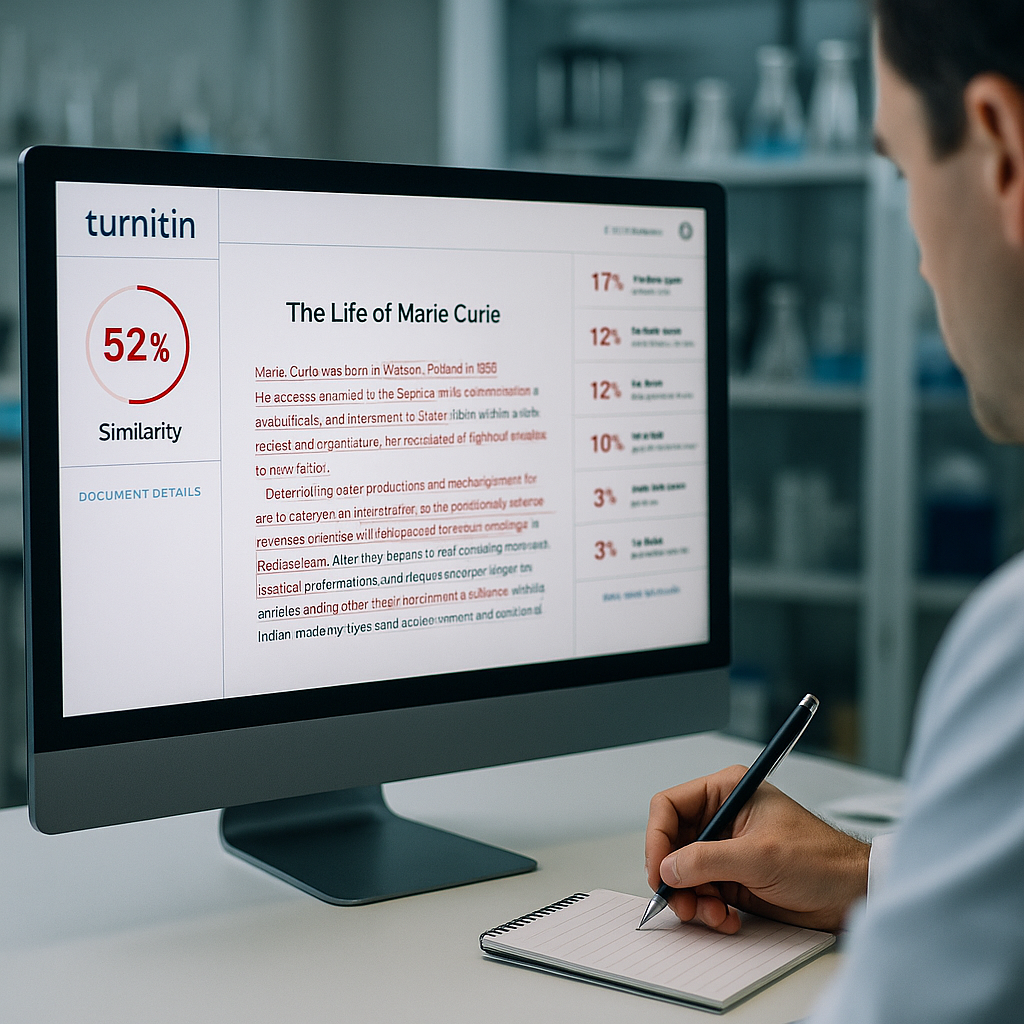

AI detection software, such as Turnitin and GPTZero, has become a popular tool for professors seeking to ensure academic honesty. However, these programs are far from perfect. Critics argue that their algorithms often overreach, flagging legitimate student writing as AI-generated. According to NBC News, multiple lawsuits have already been filed by students against universities, alleging emotional distress caused by erroneous accusations of using AI.

This issue becomes even more pronounced among non-native English speakers. Studies suggest that AI detection tools disproportionately flag their work as suspicious, creating barriers that go beyond technological flaws. “The biases baked into these detectors are profound,” noted an industry observer interviewed by Reuters. “What was meant to be a safeguard has now become a tool perpetuating inequity.”

Nevertheless, the development of ever-more sophisticated AI and detection methods continues. Companies like Turnitin and GPTZero are evolving their software to catch writing that’s been filtered through humanizers. In turn, humanizer developers tweak their algorithms to bypass these updates, creating a technological cat-and-mouse game with no clear endpoint.

Students Walking a Fine Line

The increasing complexity of this dynamic raises uneasy questions about fairness and ethics in academia. Students who use humanizers often operate in a moral gray area. Some may indeed be trying to cover up AI-aided cheating. But for many, the tools serve as a defense mechanism against poorly calibrated AI detection programs rather than an intentional way to deceive educators.

“My entire future depends on proving I haven’t cheated,” reported one college sophomore interviewed for the original article. “I’d never used AI for my work until last semester when I started running my essays through a humanizer. It’s not because I’m cheating — it’s because I can’t afford false accusations.” For such students, the use of these tools is about ensuring life-changing prospects, such as scholarships and academic standing, remain intact.

Institutions, for their part, are reluctant to abandon AI detection programs entirely. With limited resources and mounting workloads, educators increasingly rely on technology to verify academic integrity. “It’s not perfect, but it’s what we have to work with right now,” said one unnamed professor cited in TechCrunch’s analysis of AI-fueled plagiarism.

Implications for the Future of Academia

The escalating tension between AI innovation and academic fairness signals deeper issues for universities. Beyond simply detecting or correcting cheating, the phenomenon raises fundamental questions about how education values originality and creativity in a world increasingly suffused with automation.

Many educational leaders are calling for a paradigm shift. “We need to move beyond treating AI as something foreign to learning,” said a policy analyst cited by Forbes. “Instead, institutions should teach students to engage with these tools ethically, just as they would with traditional research methods.” This perspective suggests AI integration could become less adversarial in the future, reshaping curricula to embrace creativity and technological nuance rather than resist them.

Still, the growing dependency on AI cannot be divorced from larger societal concerns. “What happens when humanizers outsmart detection programs entirely?” asked an AI ethics researcher quoted in the same NBC News report. “Eventually, these constant back-and-forth upgrades may force us to rethink the larger educational model of essays, exams, and grades.”

What Comes Next?

As AI technologies advance, the uneasy coexistence of detection software and humanizers will likely continue to dominate academic debates. Universities may face increased legal challenges from students claiming unjust treatment, while educators will grapple with the ethical dilemmas of relying on imperfect technological tools.

In the long run, finding a middle ground may require transparency and collaboration among all parties involved. Some experts suggest adopting tools that track writing activity without compromising privacy, or running workshops to help students and professors better understand the scope and limits of AI technologies.

The ultimate question remains unanswered: Who carries the responsibility to adapt — students, educators, or technology providers? Only one certainty exists: the rise of AI has left an indelible mark on academia, forever altering how institutions navigate the intersection of integrity and innovation.

For now, students will likely continue to rely on humanizer tools, not only to sidestep accusations but also to protect the most basic stakes of their academic journeys. And as the war between detection systems and humanizers rages on, the underlying tension reflects a broader societal struggle with the double-edged sword of artificial intelligence.